The future of AI search and the challenges for Bing and Bard

AI-assisted search promises a new era of tech, but Microsoft and Google face many challenges, such as fake information, social conflicts, and declining ad revenue.

AI helpers or bullshit generators?

The biggest problem for AI chatbots and search engines is bullshit

The “one true answer” question

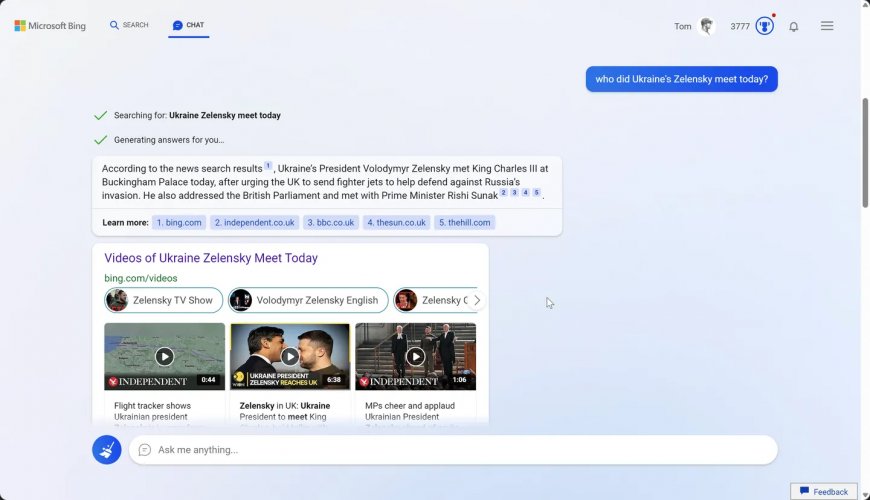

Bullshit and bias are challenges in their own right, but they’re also exacerbated by the “one true answer” problem — the tendency for search engines to offer singular, apparently definitive answers.

This has been an issue ever since Google started offering “snippets” more than a decade ago. These are the boxes that appear above search results and, in their time, have made all sorts of embarrassing and dangerous mistakes: from incorrectly naming US presidents as members of the KKK to advising that someone suffering from a seizure should be held down on the floor (the exact opposite of correct medical procedure).

As researchers Chirag Shah and Emily M. Bender argued in a paper on the topic, “Situating Search,” the introduction of chatbot interfaces has the potential to exacerbate this problem. Not only do chatbots tend to offer singular answers, but their authority is also enhanced by the mystique of AI, with answers collated from multiple sources, often without proper attribution. It’s worth remembering how much of a change this is from lists of links, each encouraging you to click through and interrogate the source under your own steam.

There are design choices that can mitigate these problems, of course. Bing’s AI interface footnotes its sources, and this week, Google stressed that, as it uses more AI to answer queries, it’ll try to adopt a principle called NORA, or “no one right answer.” But these efforts are undermined by the insistence of both companies that AI will deliver answers better and faster. So far, the direction of travel for search is clear: scrutinize sources less and trust what you’re told more.

Jailbreaking AI

Jailbreak a chatbot, and you have a free tool for mischief

Here come the AI culture wars

Burning cash and compute

“average is probably single-digits cents per chat; trying to figure out more precisely and also how we can optimize it,” Sam Altman (@sama), December 5, 2022