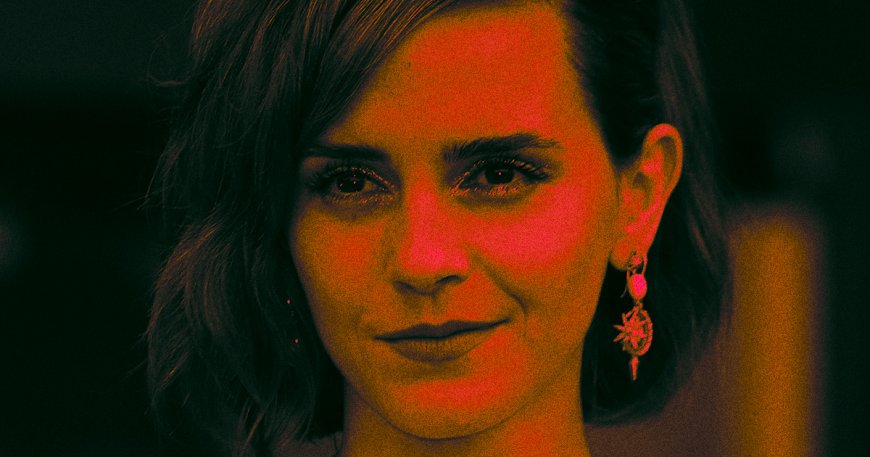

The Dark Side of Voice Cloning: Emma Watson's Experience Highlights the Dangers

Deepfake audio is on the rise, and Emma Watson's experience serves as a warning. Learn about the dangers of voice cloning and how to protect yourself.

Since finding fame at the tender age of ten through her role as Hermione Granger in the Harry Potter movie franchise, Emma Watson has become a household name. Sadly, she has also become one of the most deepfaked celebrities online.

This word, 'deepfake', is slowly embedding itself in our everyday lexicon as concerned campaigners speak out about deepfaking (a form of AI-generated synthetic media that can make it look as though anyone is doing anything in a video or still image) to raise awareness and push for strict laws. But at this early stage, while deepfakes are still trickling into mass public consciousness, they are mostly associated with political takedowns or with women being edited into pornographic scenarios, largely without their consent.

But deepfake videos aren't the only thing we should be concerned about and discussing. There's also deepfaked audio.

Can you deepfake someone's voice?

Over 90% of deepfaked content online is sexual in nature and features a female victim (whether a celebrity or a member of the general public), and most of it is non-consensual. Equally alarming is the fact that many of the famous faces who are popular on deepfake websites receiving millions of hits a month also entered the spotlight at a young age, and sometimes images of them taken while they were underage are used to generate pornographic videos.

Today, in 2023, most of us are aware that Photoshopped images can be (and often are) created and posted without any disclaimer. We know to look out for them on Instagram via tell-tale curved door frames or wobbly floor tiles, and the Kardashians are often called out for their Photoshop fails. We're also slowly coming around to the idea that videos can be distorted too, thanks to the likes of Nicki Minaj's 'slimming filter' glitch and ITVX's new series, Deep Fake Neighbour Wars, which loosely casts 'Idris Elba', 'Greta Thunberg' and 'Kim Kardashian' as argumentative neighbours. (The celebrities' likenesses are AI-generated and combined with the bodies of impersonators, who also do the voiceovers.)

How on earth ITVX has been able to get away with using these celebrities' likenesses is as curious as it is creepy. Presumably, the sheer lack of solid legislation on deepfaking someone's image, with or without their consent, is part of the reason (the government has promised this will be partially addressed in the new Online Safety Bill). When asked, an ITVX spokesperson said: "Comedy entertainment shows with impressionists have been on our screens since television began, the difference with our show is that we're using the very latest AI technology to bring an exciting fresh perspective to the genre." The show also runs a disclaimer at the start of each episode stating that none of the celebrities involved have consented, and displays a 'Deep Fake' watermark on screen throughout.

When it comes to deepfaked audio, however, the rules are even flimsier, and the damage voice cloning can do is already playing out. Sadly, it's something Emma Watson has once again been targeted with, on a terrifying scale. Recently, an uncanny AI-generated clip of 'her' voice reading out Adolf Hitler's Mein Kampf (in which the murderous dictator outlines his political manifesto) was posted on 4chan, a forum known for its dark undercurrents and strong incel community, showing just how powerful (and accessible) this technology is becoming. On a bigger scale, in 2020, criminals even used deepfake audio to trick a bank manager in Hong Kong into authorising a $35 million transfer.

Concerns have also been raised about deepfaked audio (or 'deep voicing') being used to blackmail individuals, and about the possibility of falsified clips being submitted as evidence in legal trials. So, what can we do about it all?

Technology site Motherboard suggests that the clip of Watson was made using the voice-simulating programme from ElevenLabs, an "AI speech platform promising to revolutionize audio storytelling" that is still in beta testing. Other publications have backed this up, with The Verge running its own experiment and reporting that it was able to puppeteer Joe Biden's voice into reading a script it had written within a matter of minutes. In response to the concerns raised in the press and on social media, ElevenLabs posted a thread on Twitter saying that it would be clamping down on people trying to use its voice-copying AI anonymously or for harmful purposes.

"We’ve always had the ability to trace any generated audio clip back to a specific user. We'll now go a step further and release a tool which lets anyone verify whether a particular sample was generated using our technology and report misuse," a statement posted 31 January read. "Almost all of the malicious content was generated by free, anonymous accounts. Additional identity verification is necessary. For this reason, VoiceLab will only be available on paid tiers. This change will be rolled out ASAP."

It went on to say: "We're tracking harmful content that gets reported to us back to the accounts it originated from and we're banning those accounts for violating our policy [...] We will continue to monitor the situation."

As for possible positive uses of deepfaked audio? Some have suggested it could help turn scripts into podcasts or radio plays, make it easier to create audiobooks and benefit visually impaired users. ElevenLabs also offers non-celebrity voices that can read out any text with a human-sounding tone, inflection and speech pattern (which is to say: robots simply don't sound like robots anymore). But it is far from the only company dabbling in this field or offering users the chance to create their own deepvoiced scenarios.

This is yet another big societal wake-up call: deepfake technology, and AI generally, will become further entrenched in every aspect of our lives, which makes it more important than ever to stay vigilant. The powers that be in the tech sphere must better control the beasts they have built, and the government must hold them to account when they fail to.